At a time when artificial intelligence is surging in popularity, some scholars are raising concerns that bringing AI and other digital technologies into the mental health field could deepen existing power imbalances between professional institutions and service users.

Critical disability scholar and law professor Piers Gooding, in a new chapter from the Handbook of Disability: Critical Thought and Social Change in a Globalizing World, warns that the push to implement AI in disability services and mental health is fraught with ethical tensions, particularly when businesses prioritize profit and governments seek more invasive forms of control. As market and state forces look to exploit these new digital tools, Gooding argues, service users and disabled people risk being exploited further.

Gooding writes:

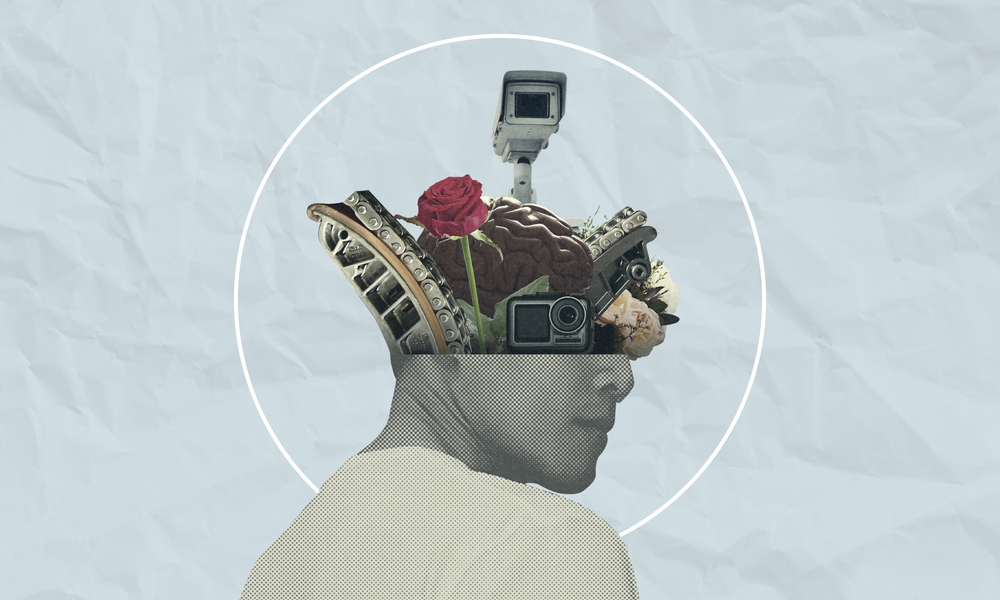

“The use of technologies like AI to make assumptions and judgments about who we are and who we will become poses greater risks than just an invasion of privacy; it poses an existential threat to human autonomy and the ability to explore, develop, and express our identities. It has clear potential to normalize surveillance in a way that is reminiscent of nineteenth-century asylums as a state-authorized (and often privately run) site of control but using twenty-first-century techniques of ubiquitous observation and monitoring.”