Government agencies have been using AI programs for over two years to decide how best to allocate limited housing resources among homeless individuals. A new qualitative study, published as a preprint on arXiv, examines stakeholders' common concerns and feedback about these programs. It sheds light on the systems' limitations and underscores the importance of incorporating input from the people that future AI services will directly affect.

Algorithmic systems in homeless services have spread widely across the US as agencies respond to a growing homeless population. However, the study's authors, led by Tzu-Sheng Kuo of Carnegie Mellon University, are concerned that these systems have not adequately considered the perspectives of key stakeholders, including service providers and unhoused individuals:

“Despite this rapid spread, the stakeholders most directly impacted by these systems have had little say in these systems’ designs … To date, we lack an adequate understanding of impacted stakeholders’ desires and concerns around the design and use of ADS in homeless services. Yet as a long line of research in HCI and participatory design demonstrates, without such an understanding to guide design, technology developers risk further harming already vulnerable social groups or missing out on opportunities to better support these groups.”

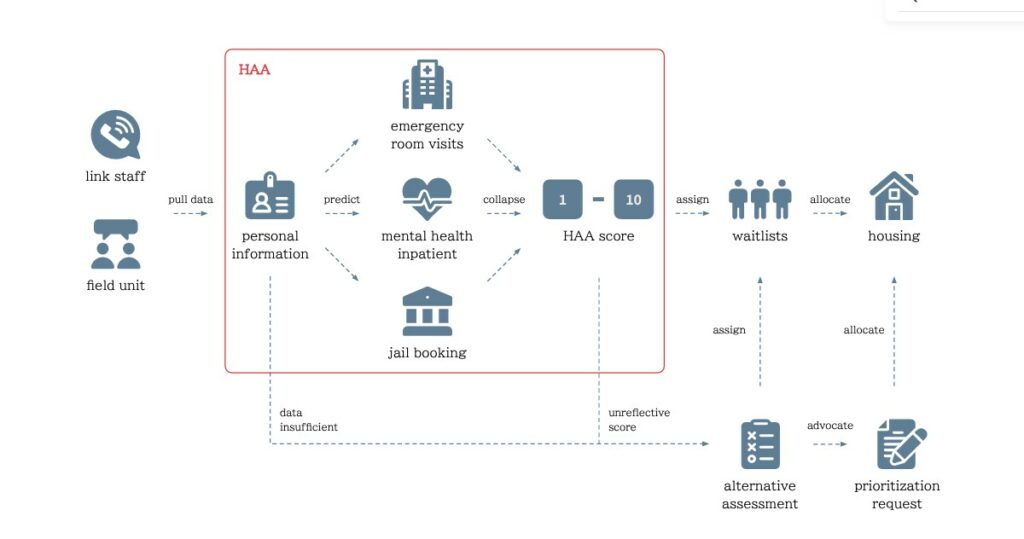

To address this gap, the authors conducted one-on-one interviews with stakeholders about the Housing Allocation Algorithm (HAA), an AI-based decision support system used in US counties to allocate housing to people experiencing homelessness.

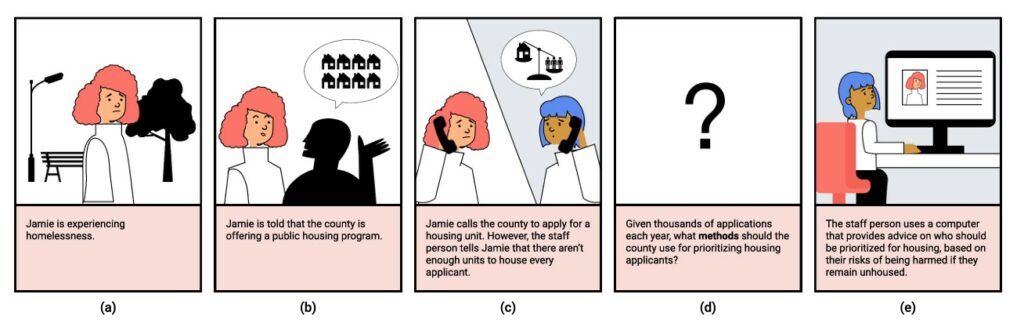

They used comic-board illustrations to depict the major components of the HAA's design and to elicit participants' feedback and concerns. After participants responded, the researchers shared a set of pre-selected responses to prompt further discussion and generate new ideas.

Combining illustrations with discussion helped the authors lower potential barriers to participation, such as limited AI literacy or reading literacy, social stigma, and power imbalances.

The study analyzed responses from 21 participants (four county workers, five nonprofit service providers, and 12 currently or formerly homeless individuals) to identify common themes and concerns about using the HAA to prioritize housing.

The need for feedback opportunities on AI system design and usage

Both unhoused individuals and service workers expressed a strong desire for regular opportunities to learn about the AI system and give feedback on it. The authors argue that, when empowered, these stakeholders can offer detailed and relevant feedback on the system's design and should be encouraged to do so. Methods that address participation barriers, such as comic-board illustrations for people with reading difficulties, proved helpful in eliciting this feedback.

Potential design flaws in the HAA

Participants raised concerns about using certain administrative data to score individuals, questioning whether the HAA can reliably and consistently identify the vulnerable individuals with the most urgent needs.

Unreliable data sources for the HAA

According to participants, administrative data such as medical records can be misleading or outdated, and they lack the qualitative information needed to correctly identify the people in greatest need of housing.

Participants argued that such data does not accurately reflect a person's situation. They cited institutional violence and prior trauma as reasons why unhoused individuals might avoid seeking help, which leaves fewer records of their needs and results in lower priority scores from the HAA.

The importance of supporting frontline workers and enhancing decision-making capabilities

Frontline workers said they felt powerless when algorithmic scores did not match the actual needs of the homeless individuals they served. This powerlessness and frustration grew worse when supervisors and organizations discouraged workers from trying to understand or override the algorithm's score. It was suggested that workers be involved in scoring and final decisions rather than leaving them solely to a potentially flawed AI system.

Despite the study's small sample and its focus on a single AI system in a small US region, its implications are clear: there are fundamental limitations in how AI systems that dictate housing prioritization for vulnerable populations are designed and deployed. Seeking feedback from diverse stakeholders could significantly improve these systems.

The authors suggest that comic-board illustrations can be an effective way to gather crucial feedback and concerns from community members of all backgrounds and literacy levels.

****

Kuo, T., Shen, H., Geum, J., Jones, N., Hong, J., Zhu, H., & Holstein, K. (n.d.). Understanding Frontline Workers’ and Unhoused Individuals’ Perspectives on AI Used in Homeless Services. arXiv.