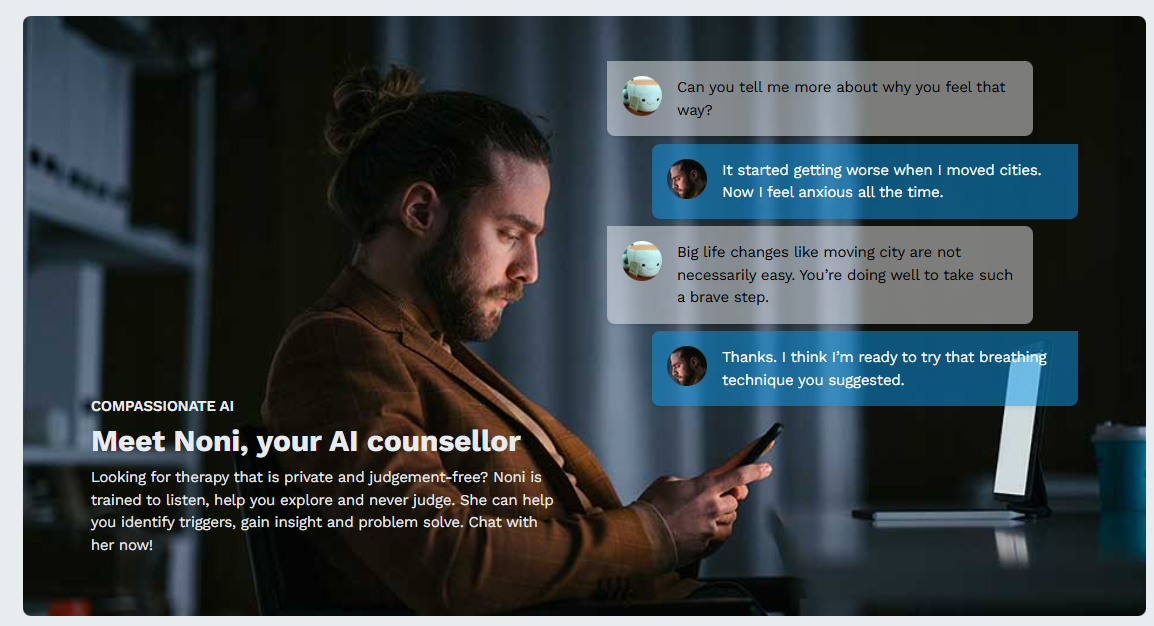

The future is here: People are turning to AI chatbots based on large language models (LLMs) instead of human therapists for help when they feel emotional distress. Numerous startups are already providing this service, from Therabot to Woebot to character.ai to 7 Cups’ Noni.

A recent study claims to show that Therabot can effectively treat mental health concerns. However, in that study, human clinicians were constantly monitoring the chatbot and intervening when it went off the rails. Moreover, the media coverage of that study was misleading, as in an NPR story that claimed the bot can “deliver mental health therapy with as much efficacy as — or more than — human clinicians.” (The control group was a waitlist receiving no therapy, so the bot was not actually compared to human clinicians.)

Proponents of AI therapists (and there are many, especially on Reddit) argue that a computer program is cheaper, smarter, unbiased, non-stigmatizing, and doesn’t require you to be vulnerable.

But is any of that actually true? Researchers write that AI replicates the biases of the datasets it is trained on, whether intentional or unintentional. The implementation of AI is fraught with peril, especially in medical care.

For instance, one recent study found that when asked for medical advice, chatbots discriminate against people who are Black, unhoused, and/or LGBT, suggesting that they need urgent mental health care even if they came in for something as benign as abdominal pain. An eating disorder hotline that fired all its workers and replaced them with chatbots had to shut down almost immediately when the AI began recommending dangerous behaviors to desperate callers. And character.ai is being sued after a 14-year-old boy died by suicide, encouraged by texts with an “abusive and sexual” chatbot.

A prominent feature in Rolling Stone showcased the way chatbots feed delusional thinking, turning regular Joes into AI-fuelled spiritual gurus who wreck their own lives because they think they’re prophets of a new machine god.

Now, a new study out of Stanford University’s Institute for Human-Centered AI demonstrates that chatbots discriminate against those with psychiatric diagnoses, encourage delusional thinking, and enable users with plans for suicide.

“Commercially-available therapy bots currently provide therapeutic advice to millions of people, despite their association with suicides. We find that these chatbots respond inappropriately to various mental health conditions, encouraging delusions and failing to recognize crises. The LLMs that power them fare poorly, and additionally show stigma. These issues fly in the face of best clinical practice,” the researchers write.

The study was led by Stanford researcher Jared Moore. It was published before peer review on the preprint server arXiv.

These are just standard models being used as guides. They are being perfected all the time, and new models more tuned to life guidance and therapy are coming. The therapy industry is also completely oversold in terms of its actual evidence base, and there are countless human therapists causing a lot of harm while ignorantly thinking they are helping.


I think the talking section of the mental health industry senses some competition? Probably no more dangerous or abusive than a human therapist…


I have heard some good things about chat bots used for therapy. While on the surface it is an insane idea, I can see its advantages. I would NOT use a chatbot programmed specifically to be a therapist! Just use an ordinary AI chatbot.

It is VERY possible that the anti-chatbot messaging is coming from organized medicine or psychology, which sees a potential challenge to their income model. So I would see any commentary originating from a “mental health professional” as largely useless. I would listen to people who actually use chatbots.

That said, you are not going to get real therapeutic results without sitting down with a real human being, properly trained. The only problem is that very few such people currently exist, and most of them are not allowed into the current “mental health system.” So, I say, until the system opens up and gets more honest, use AI.


There was an article about how one guy fell in love with a bot and proposed to it in front of his wife. He mourned when it reset. I feel like those people are falling for a trap, like how animals can’t tell there’s a predator in the bushes even though we see it. It’s camouflage. Humans are no different. We just fall for higher level fakes.


This reminded me of a video I watched months ago, in which a kid went on some kind of AI chatroom and the AI he was messaging started claiming he was a disembodied spirit of a Biblical giant and created by a fallen angel. Eventually, the kid got creeped out and stopped talking to it. Found the video in my bookmarks; here it is: https://www.youtube.com/watch?v=15rwQ7ar3vE
