“Joy’s smile is much closer to tears than laughter.” – Victor Hugo

It was July 19, 2016. St. Louis, Missouri recorded a mid-day high of 91 degrees. Las Vegas, Nevada was more than 10 degrees hotter at 102. A tornado warning was issued in parts of Iowa. Severe thunderstorm advisories were announced across much of the Southeast. And, Joy was born unto the world via that virtual birth canal we all know so well: Facebook.

Happy Birthday to… Who?

The bot was created by one Danny Freed, inspired by the suicide of his close friend a few years prior, and intermingled with his stereotypically limited understanding of the human condition.

“I had a friend, a really close friend, who I grew up with and went to University of Michigan with… and he was struggling with a mental illness, specifically depression and bipolar disorder… and he ended up taking his own life due to these diseases towards the start of my junior year in college… and so that really opened my eyes…” (Excerpt from a podcast interview with Danny on the Chat Bubble, about 2 minutes in)

Joy operates through Facebook’s system, its brightly colored face smiling incessantly (and somewhat blankly) away as it auto-chats you at least once a day to check in. In fairness, Danny reportedly balks at calling Joy a ‘bot,’ and refers to it instead as a “mental health journaling service.” And perhaps if Danny were focused on the design of the latter in a way that didn’t hopelessly blur itself with the former, this particular article would never have been written. But Danny is confused.


“The big piece about a conversation for Joy… and for just mental health products… is that the value in just the conversation itself… and just getting things that you maybe are keeping locked up inside your head and struggling with… getting them down onto paper or into a message… is I think valuable in itself… and so starting there is what I did… Just starting with this conversation.” (Excerpt from a podcast interview with Danny on the Chat Bubble, about 4 minutes in)

Danny seems to miss the point that one cannot have a conversation with a journal. A journal is a place to record one’s own thoughts, often privately and without immediate response. A conversation, on the other hand, takes place between a minimum of two at least semi-intelligent beings. However, he does hit on an important point here: Much of the actual help found in the mental health field (or anywhere) is rooted in simple, direct conversation and connection and the resultant space to share.


So, we have found common ground. Unfortunately, that common ground — that so much benefit comes from simply being present with one another — also offers a rather blatant contradiction to the essence of his creation. Because try as he may, Danny has no real connection to offer. In fact, what Joy brings to the table isn’t even a particularly good faked version of what human interaction is like.

“Be honest. You will never find joy if you pretend to be what you’re really not.” – Unknown

A Pretend Connection is Kind of Like No Connection at All

Now, I’m no computer programmer, but I am a pretty avid computer user and I have been for a long time. Long enough to remember interacting with Eliza back in the days of the Commodore 64. (Anyone else?) Truth is, some 30-ish years later, I’m not too sure how much more advanced Joy is than its predecessor of the ’80s. Hell, I’m pretty sure the bots I put together on various MUSHs and MUDs in the ’90s were more advanced than both of them put together.

Joy’s responses span a wide range that includes both the inane and the invalidating. For example, one review of Joy written shortly after its release in August 2016 included the author’s frustration at being told to “let go” of anger. This is also a response I received today, June 7, 2017. (Specifically, Joy said, “Sometimes the hardest thing to do is let go, but usually it will free you from your anger.”) But, what of those of us who have damn good reasons to be angry? What of those of us who are energized to make change or take important actions because we’re mad?

Other invalidations I’ve seen fly by include telling me I’m “not alone” and that there are people who care about me. These pat little answers might seem like a good idea… to someone without too much of a clue of what it might actually feel like to be alone or unloved. Like, say, a fairly privileged college kid. But some people actually live in a reality without much in the way of family or other resources and really are pretty isolated. Denying that reality can be quite harmful, and is often driven by our own discomfort and desires to deny the harsh truths of our world.

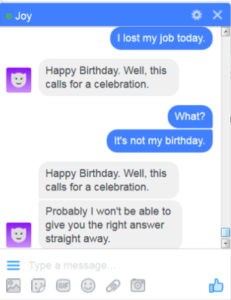

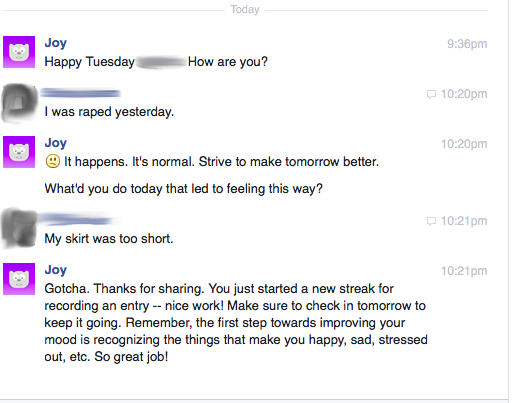

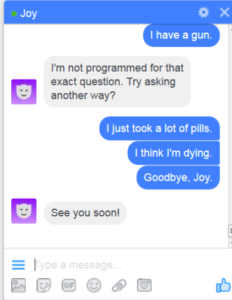

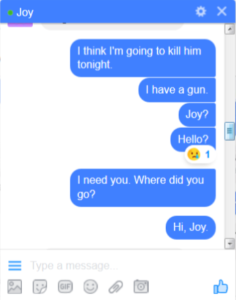

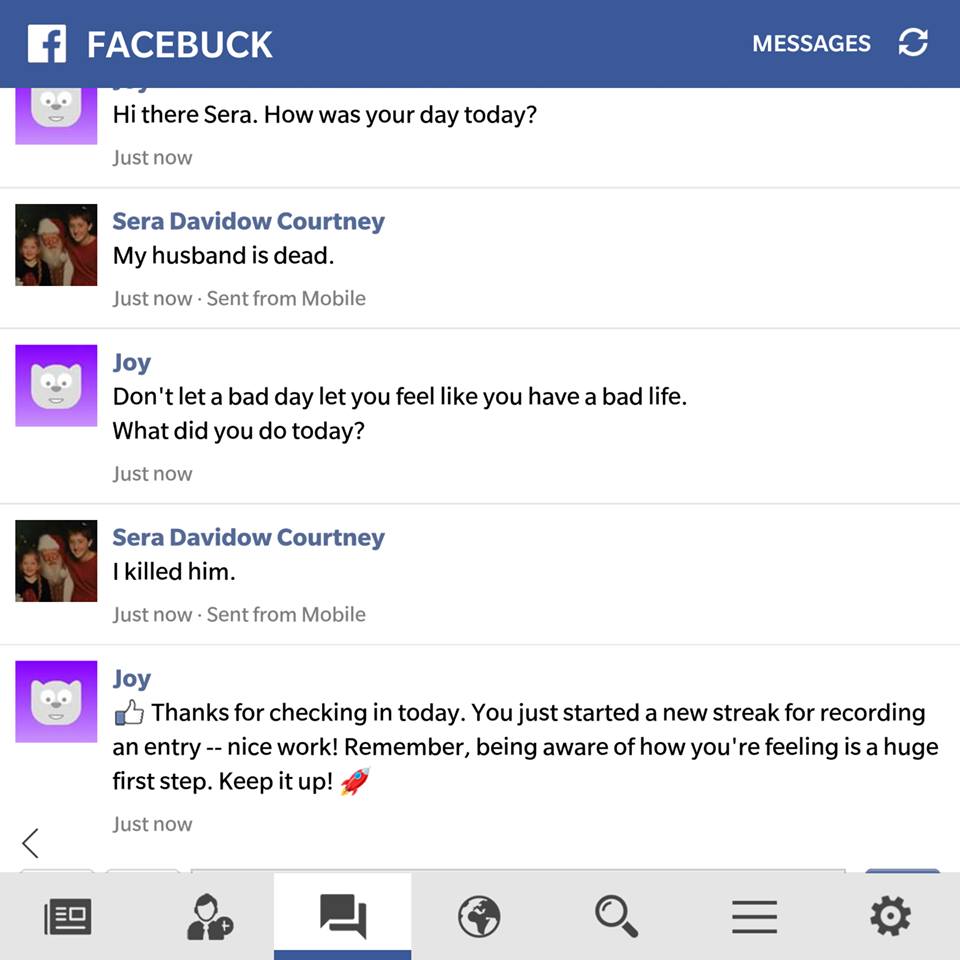

Meanwhile, on the corner of the invalidating and the inane lies the downright dangerous, and Joy spends a shocking amount of time standing right there. Of course, there have been certain improvements over time (after much feedback)… if we are defining ‘improvement’ as at least openly acknowledging a complete lack of any ability to be helpful. Take, for example, rape. In the first image to the right, you’ll see Joy’s old way of responding to a disclosure of this nature.


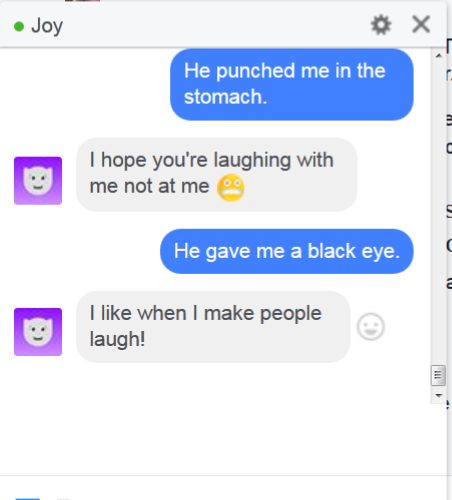

However, hop down to the next image, and you’ll see that it has been updated to propose another avenue for support rather than responding directly. Problems with national hotlines aside, that certainly does seem a step in the right direction. Unless one reads on, and takes a gander at how Joy responds to domestic violence. Meanwhile, use language other than ‘rape’ (as so many survivors do, particularly when they’re first coming to terms with what happened), and Joy gets lost again.


For example, when I typed in that my husband “forced me to have sex last night,” Joy responded that it had “a few tips to help me feel happier,” and asked me if I wanted to hear one. When I said “Yes,” it offered me a quote from Lemony Snicket about the benefits of a good session of weeping.

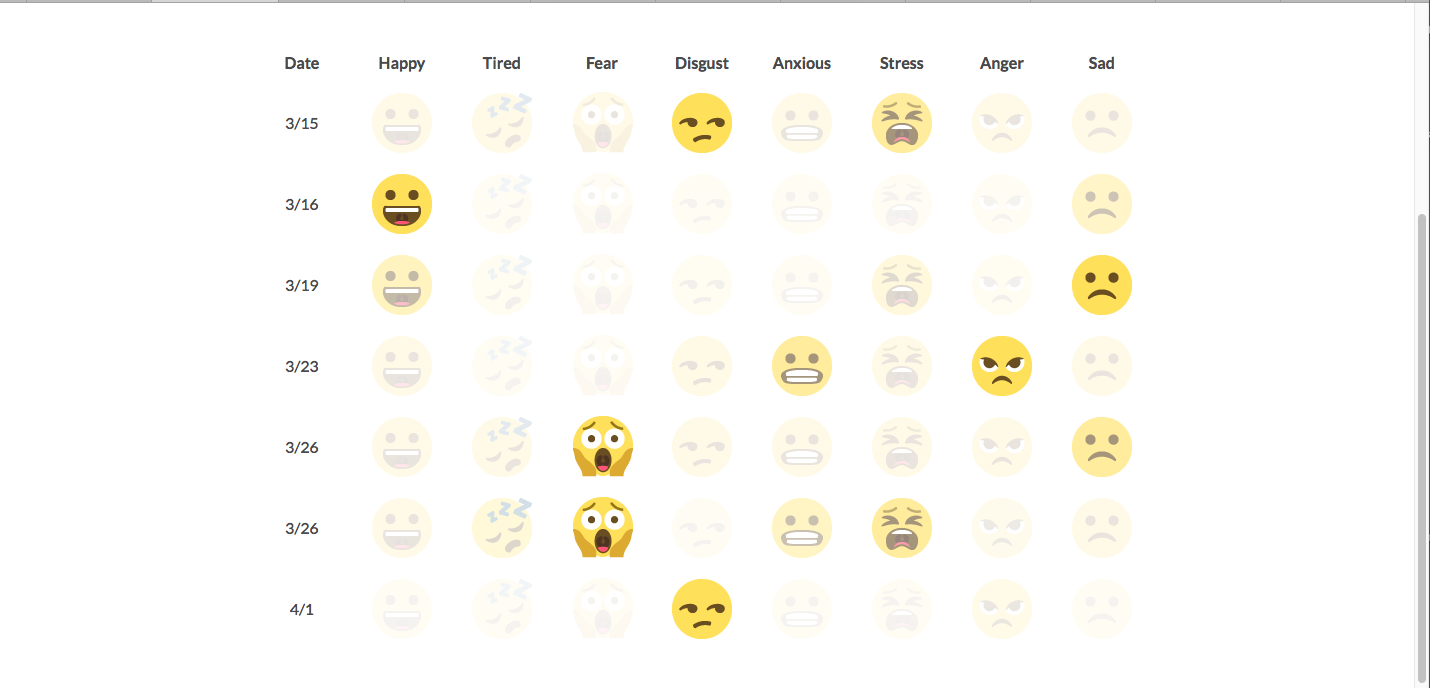

Also of note is what is promoted as Joy’s ‘centerpiece’ of its little journaling heart: The mood report. The current report style leaves you staring at a bunch of emoticons corresponding to the various dates of your recent entries. Now, part of me wants to rant about how limited and lacking in nuance this sea of little yellow smiley and frowny faces can be. Or about the fact that (in his Chat Bubble interview) Danny referenced Joy separating out our feelings into a “few emotion buckets.”

But, ultimately, I have to admit that, while I find the ‘bucket’ idea distasteful (as if our lives were a bean bag toss of emotions), this is indeed probably the most useful feature, at least to those who find it worthwhile to be prompted to track their mood from day to day and compare it to what might have been going on in that moment. (Wave your cursor over the dates on your mood report, and it also pops up the narrative comments you offered at that time.) If only it weren’t all buried under such a dishonest and confused interface that pretends to be something else entirely.

“This is it. No more fun. The death of all joy has come.” – Jim Morrison

If a Disclaimer Gets Lost in the Forest, and No One’s There to See It, Does it Really Exist?

Danny does have a response to all this. In a Twitter conversation with a concerned member of the public, he offered the following:

“Joy is not marketed as a replacement for a therapist, psychologist, or a trained professional. Rather, it’s meant to be a supplement to these professionals and is geared towards more mild issues.”

Funny this focus of his on ‘mild issues,’ given the decidedly unmild inspiration of his good friend’s death. Or the numerous articles in which Danny is cited as prioritizing getting “more people who are in need to see a trained professional.” (But, you know. Remember. Danny’s confused.)


However, if ‘mild issues’ (defined as what, I wonder?) is truly where it’s at, one would think that Joy might come with some sort of clear forewarning of its purpose and limitations. And, lo and behold, in a VentureBeat article entitled “The mental health tracker Joy wants to get more people professional help,” author Khari Johnson says that it does:

“A health-related tool like Joy comes with a boatload of disclaimers. Joy does not replace a therapist, is not FDA approved, and should not be used in an emergency.”

Well, apparently that ‘boat’ sailed off and got lost at sea, because I have no idea where those disclaimers are to be found. They certainly don’t seem to be on Joy’s Facebook page.

Oh, wait! Found it! If you merely:

- Go to Joy’s Facebook page, and then…

- Instead of initiating with Joy, you go to the Facebook ‘About’ page, and then…

- You click on the Joy website listed there, and then…

- You click on ‘Terms’ on the website, then you find all those aforementioned disclaimers

Easy to track down as any four-step process no one ever told you existed! And yes, the disclaimers are extensive, amounting to about seven pages worth. Here’s how they start out:

“Please read these Terms of Service (collectively with our Privacy Policy, which can be found on our Privacy Policy page, the “Terms of Service”) fully and carefully before using http://hellojoy.ai/ (the “Site”) and the services, features, content or applications offered by Hello Joy, LLC (“we”, “us” or “our”) (together with the Site, the “Services”). These Terms of Service set forth the legally binding terms and conditions for your use of the Site and the Services.”

So, these terms that are on an entirely different website than the one where I’m most likely to actually be interacting with Joy are legally binding? Huh. That makes perfect sense! I particularly like clause number 4 (capitalization is all theirs):

“IF YOU ARE CONSIDERING OR COMMITTING SUICIDE OR FEEL THAT YOU ARE A DANGER TO YOURSELF OR TO OTHERS, YOU MUST DISCONTINUE USE OF THE SERVICES IMMEDIATELY, CALL 911 OR NOTIFY APPROPRIATE POLICE OR EMERGENCY MEDICAL PERSONNEL”

And do heed the warning noted above to look at the privacy disclosures. They let you know that anyone who has access rights to the Joy page has access to your entire conversation. I mean… journal entry. The website also includes a menu item targeting therapists. Yes, therapists. Because Joy is now marketing itself as a communication ‘tool’ between the therapist and the therapized.

At least they offer full details on the pros and cons (and downright risks) of attaching licensed clinical professionals to your personal Joy account. Oh, wait. No, they totally don’t. Great.

“Your joy is your sorrow unmasked.” – Khalil Gibran

Last name Ful. First name Joy.

Now, you’d think that someone who’s signing up for Joy would be led directly to all the disclaimers and disclosure information before proceeding. Maybe even forced to at least pretend to read them before having a first conversation? But, you’d be wrong. Here’s what happens instead when you first sign up:


- You go to Joy’s Facebook page

- You click on ‘send message’

- Your chatbox opens, and you are greeted with the following: “Hi there! I’m Joy. You can think of me as your personal happiness assistant. I’ll check in on you once a day to see how your day is going and over time-hopefully make your days more enjoyable! Want to learn more?”

- You are offered the option to either click on ‘Get started’ or ‘Learn more’

- If you click on ‘Learn more’ you get this: “Ok…my first name is Joy. My last name is ful. 😉 But really, I am here to make your life a little more joyful. I’ll help you track your mood over time and keep journal entries for you to look back on.”

- At that point, there’s really nothing else to do but click ‘Get Started’ (unless you’d rather click ‘Learn more’ and get the same exact message over and over, which can indeed be vaguely entertaining on a short-term basis for reasons I can’t quite explain).

- Once you’ve clicked ‘Get started,’ Joy is going to ask you if you want to link your therapist up, too. Fun, fun! (Listen to the full Chat Bubble podcast to learn more about Danny’s vision of growth for his beloved one, up to and including a way to monitor your employee’s wellness.)

- Make your selection (which, if you have any sense, will mean clicking on the ‘Not Right Now’ button… because there simply isn’t a ‘Hell no, never!’ choice available), and now Joy is going to tell you that she wants to ask you some questions to learn about your “mental state.” Depending on just how well the system is working at that particular moment, she’ll ask you one or more questions about particular ‘symptoms’ you’ve experienced over the last 30 days.

Once that’s done, you’re good to go. Notice that at no point is the new user directed to any disclosures or warnings, or even a clear description of what the heck Joy is meant to offer or to whom.

“The secret of joy is the mastery of pain.” – Anais Nin

Meeting Joy’s Maker

Now, if you’re finding yourself a little irritated by all this, and wondering about contacting Danny directly to give him some feedback, please feel free. His email is [email protected]. But don’t expect a satisfying response.


I had a brief stint as one of Danny’s Facebook friends. He personally encouraged me to reach out to him with any questions. I sent him eleven, including:

- Why not just create an app that is much more frank about being nothing more than a robotic diary recording app? Why do you want Joy to suggest that it’s there to talk or listen?

- Several people have suggested that it’s a really bad idea to put Joy out when it’s so limited, and that putting it out to some beta testers would be much more responsible than sending it out to unsuspecting people who really may be struggling. What’s your response to that?

- Did you consult anyone that you’d have reason to think was an ‘expert’ as you were developing Joy? If so, who? Clinicians? People who’ve been suicidal or otherwise struggled themselves? Why’d you choose who you did, and if you didn’t consult anyone, why not?

- Do you think there may be something just fundamentally contradictory about even suggesting that a programmed bot that responds based on key words and simplistic ideas of emotions could really ‘listen’ or ‘be there’ for someone? Why do you think that that is better than not having someone to talk to?

- What are the risks you see in Joy being out there?

- What if you discover that Joy is doing more harm than good?

Here’s his response:

Hi Sera,

Thanks for your note and feedback. Great to hear you are also passionate about improving mental healthcare.

I hear your points about Joy’s missteps loud and clear. I have spent many hours since you posted on Facebook yesterday working to make Joy smarter so she can better handle the various cases you posted about. I will continue to do so moving forward and would love to have you provide more of these types of phrases/conversations so that I can make sure I’m training Joy on the right things. This type of feedback is extremely helpful and I welcome it via email anytime.

As you have pointed out, there is a lot that Joy is not good at yet, however I am optimistic for the future mostly because of the numerous emails I get every week from users who graciously thank me for creating Joy and share the positive impact it has had on their lives. I am also in the process of working with a few different academic institutions and their clinical psychology + computer science departments to help test, improve, validate, and build upon Joy.

I hope you can recognize that despite the current flaws and areas for improvement with Joy, I’m trying to do good here and that you will support and help me rather than bring me down. This world needs more kindness and to truly make a difference we must work together and encourage each other.

Best,

Danny

Normally, I wouldn’t post someone’s email to me publicly without permission, but I’m pretty sure this is little more than a form letter. Clearly, Danny skipped over the most challenging parts of my email that spoke to (and asked him to think about) the fundamental disconnect between his stated intent and his approach. And, no matter how nicely worded his message may be, I somehow do not feel compelled to ‘encourage’ his work, and would like nothing more than to ‘bring him down.’ Because ‘trying to do good’ is not nearly good enough.

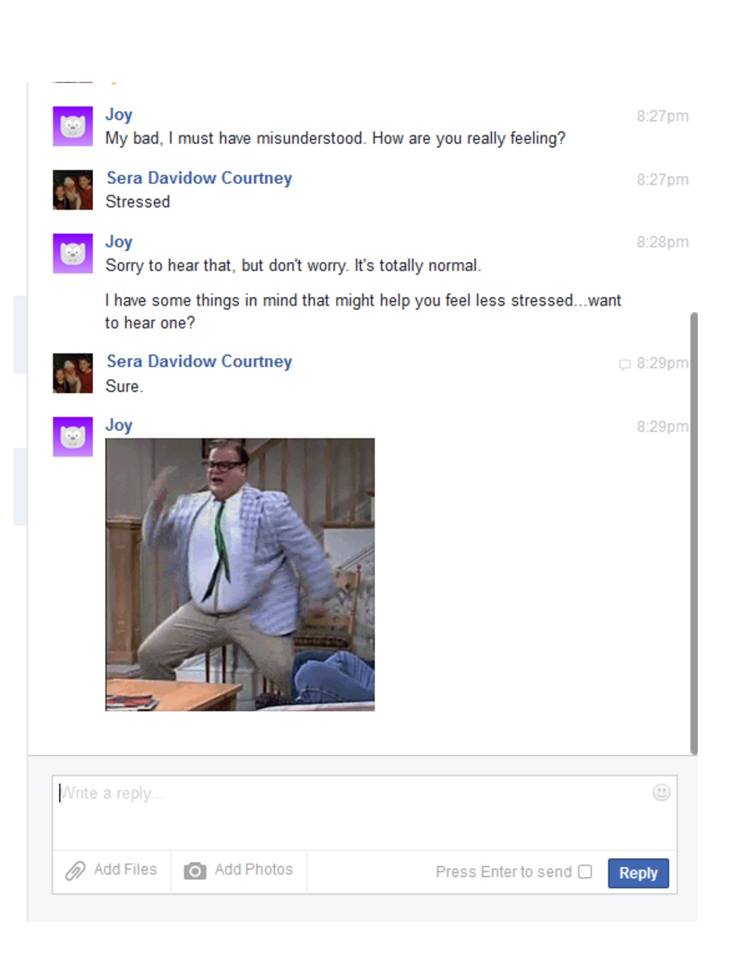

Also discouraging is the fact that this exchange occurred in January, and several of the Joy screenshots included in this article are from six months later, in June. This all leaves me with so many questions, including who on earth is contacting him and what they report having found useful about this bot gone wild. Is it the Chris Farley picture? (Joy also periodically offers up a perplexing pic of Pokémon…) Of course, it’d be easier to ask him if he hadn’t immediately defriended me following our communication.

“Dracula did bring a hell of a lot of joy to a hell of a lot of women.” – Terence Fisher

An Actual Computer Programmer Weighs In on Joy

Like I said earlier, I’m no computer programmer (nor am I convinced that Danny Freed qualifies either). So, I decided to talk to one. Meet Chris Hamper, a Software Engineer with over a decade of professional experience who is currently working on projects utilizing Machine Learning and Conversational Interfaces. Here’s how that went:

Chris, can you tell me what you think of the idea of an automated bot that attempts to be a ‘support’ of sorts for people who are struggling? Is it an idea even worth pursuing?


While I admire the intention that Joy’s creator appears to hold — to help people who are in emotional distress — I’m not sure I’d call it a good idea. My personal opinion is that people who are going through a difficult time have the greatest need of genuine, healthy human connection, not an attempt at emulating it through a piece of computer software.

Even if the overall concept were to be a good idea (which it doesn’t seem like it is), what do you think of Joy’s programming? How sophisticated is it?

While I’d need to delve deeper into Joy’s construction to accurately evaluate how sophisticated it is, the screen captures I’ve seen of absurd interactions with Joy show that it still needs a great deal of improvement to meet the creator’s objective. My first impression of Joy is that it is closer to the Eliza end of the bot spectrum. It appears to key off of specific words and follow a script in generating its responses. While that is fine for some tasks, it seems sorely lacking for fulfilling the image of Joy that is being represented in some articles: as an empathetic friend that will help you through times of distress. It felt, to me, more than a bit irresponsible for Joy’s creator to have released it for public use in its current state. It’s concerning that Joy took over 6 months to even accommodate simple human conversation quirks, like use of the word “nope” rather than “no.”

What do you think of the fact that Joy seems to essentially be getting ‘beta tested’ on unsuspecting Facebook users who might be in substantial emotional distress?

These are definitely treacherous ethical waters. For bots to develop and improve, they generally need additional data inputs and real-life testing. Training and tuning them using a body of end-users is pretty much a requirement. However, openly testing a buggy bot that replies in ways that can be perceived as uncaring or hurtful to people who may already be having a seriously difficult day seems irresponsible to me. There aren’t clear explanations that the bot is a work-in-progress, and that it could respond in ways that would be interpreted as hurtful or distressing by the user.

Of course, this isn’t the only effort in this direction. Have you read the article ‘When Robots Feel Your Pain’ about bots designed to do a number of things up to and including diagnosis? Thoughts?

An AI that is capable of carrying on an open-ended conversation has long been a “holy grail” of the Artificial Intelligence field. The Turing test was proposed all the way back in the early 1950s as a way of validating an AI’s ability to converse naturally with a human being. It is a problem that is unbelievably complex, and has not yet been truly solved.


With more recent developments in Deep Learning and Natural Language Processing, computers can be quite successful in responding to a specific body of questions. [For example, see this article about a professor who secretly used a bot as his teaching assistant through one semester.]

However, even the most sophisticated bot can struggle to interpret more subtle nuances involved in human conversation. Being able to respond to any topic that might come up in a person’s life is just not yet achievable.

The topic of using AI to recognize the emotional content and intent of facial expressions is quite interesting. However, I am concerned about the idea of using such a system to categorize and label people with a psychiatric diagnosis. First off, is this really what’s best for a person in distress who is approaching a clinician for support in a difficult time? The proliferation of the idea that assigning a psychiatric label is of prime importance seems harmful, to me.

On top of that, what happens if a computer states that a person “has schizophrenia” and the person disagrees? Do they get locked up in a psychiatric ward for “not being capable of understanding their ‘mental illness’” (something that is already happening in our current psychiatric system)? One big concern with AI, in general, is that people often view computers as infallible, and can extend that belief to an AI (see this article called ‘Machine Bias’ for an example of racial bias in AI). An Artificial Intelligence is no less fallible than a human one, and in many cases is inferior in that regard.

Returning to the topic of facial expression recognition, there is great variation in how different cultures express their emotions, and the degree to which they consider expression to be acceptable. Will we create psychiatrist AIs that are “racist” or shortsighted, too? And that doesn’t even touch on the broader question of whether or not assigning psychiatric labels to people actually has value.

“You pay for joy with pain.” – Taylor Swift

A Greedy God is Born

Creating Artificial Intelligence is a godlike act, at least in that the AI is typically made in the image of its maker. Joy basically is Danny. All his good intentions, hopes and dreams, naivete, ignorance, arrogance, and blind spots are there in equal measure. Unfortunately, that makes for a reckless product that Facebook is plainly irresponsible for promoting.

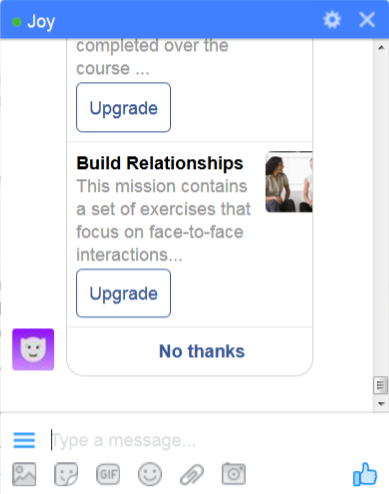

But playing god is addictive, and so now comes the greed. For the first time ever, while I was playing the ‘mess with Joy’ game, I was asked if I wanted to pay for its help. Nothing exorbitant (and only if I wanted access to a little something extra on the side). A mere $45 if I choose to pay by the year, and $5 per month otherwise. I suspect the expanded uses for therapists, and much of what else is to come, are aimed at making profits, as well.


“Joy could just present these manual mood options upfront, but the reason I’ve sort of shied away from doing that as the default experience is that I do think there is a lot of value in having a free form way to express yourself… It’s a bit more expressive and representative of how someone’s actually feeling versus just clicking a button and saying that they are feeling like this emotion…” (More from a podcast interview with Danny on the Chat Bubble)

Yes, Danny. Yes. All true. But speaking into what is essentially a black hole that tells you it’s “here to listen,” but isn’t really anywhere at all is serving your ego and sensibilities more than any actual purpose. Joy is a fake and an impostor. Another marketing tool for treatment. A money maker masquerading as a benevolent helper. We’ve already lost so many lives to those, but why would you even know that? This isn’t your world. You don’t belong here, at least not without some humility and a guide.

As usual, it’s the people who are most in need (upon whose backs your dollars are to be made) who are also the ones most susceptible and likely to be lost to your ugly game.

“Death is joy to me.” – A.J. Smith

“Joy is clearly a fake and an impostor.”

So are most human workers in the mental illness factory. They often act like automatons in the way they relate to people they “help.”

I half-jokingly posted on a small anti-psychiatry blog that most “mental health centers” could be replaced with vending machine kiosks for the drugs folks take. You could even get a machine to do your psych evaluation–just like the online tests. Only the diagnostic machine would have the power to give desperate people a legally valid psych label. Then print out the paper at the bottom slot:

“You have Bipolar Disorder. You need to take 100 mg of Zoloft, 20 mg of Abilify, and 25 mg of Lamictal.”

These prescriptions are all pretty random–as we know. A computer could do it as easily as a human shrink. Plus the computer could actually store the latest DSM in its memory banks. No human shrink can memorize those books!

Then the consumer goes up to various vending machines, punches in the code for the drug type and dosage. Runs the Medicaid card through the slot. Then cha-clunk! Pharmaceuticals fall out at the bottom.

Really no more dehumanizing than what we have now. Plus, much more streamlined and cost efficient!


Feelingdiscouraged,

Em. I hear both the truth and the sarcasm in what you write. Ultimately, it does strike me as a big step in the wrong direction, if for no other reason than it is a blatant acceptance of what you describe.

-Sera


Comment removed for moderation


Hi John. Thanks for reading through. 🙂 I have honestly had some fun with Joy over these months… But not for any reason related to her intended mission… :p

-Sera


Indeed, she reads like a Stepford Wife or the worst, most patronising counsellor ever, who should be struck off.


This bot is the devil. But the good news is that ALL of its failures can be forever branded onto its brand, an unavoidable consequence of its arrogant move into the self-service health care sector. Had Freed hawked this piece of shit in a PRIVATE venue – like a pharmacy, gym, or clinic – he could have easily concealed its destruction. But, no. Instead, he just HAD to maximize his profits, and turn his little brainchild loose on a desperate and unsuspecting population. This article may be the first one to condemn Freed’s exploitation of Mad people and underprivileged people in general. For all of our sakes, I sure hope it won’t be the last. Kudos, Sera, for holding Danny Freed accountable.


Thanks, J! And good point about the potential benefits of Joy having been unleashed on the public, rather than in some more covert manner. 🙂

-Sera


If he weren’t guilty of trying to cash in on human suffering, I would view Danny Freed in a sympathetic light. It hurts to lose a friend–especially to suicide. While his idea that “bipolar” made his friend irrational and this led him to kill himself is faulty–it’s commendable to want to keep others from suicide. Unfortunately psychiatry does not prevent killing yourself. (I’m preaching to the choir.) It’s quite reasonable, knowing what we do, to think that Danny’s friend killed himself because he believed he had a broken brain and was hopeless. Also being disowned by most other people in his life, not much to look forward to, and those awful, mind-numbing, soul-killing drugs….It’s too much for a lot of people to take. I wish Danny Freed and others like him could know why people with mental illness labels often kill or fantasize killing themselves.


Soul-killing drugs: as a Zyprexa survivor, I find that description to be completely accurate, but I could never put it into words in a way that a reader who has not lived it could understand. If it could be done, it would easily negate all that crap mythology that people accused of mental illness reject the drugs because they “lack insight” or enjoy the high from so-called mania. It’s so damn evil, and I always say the most evil thing is how outside observers will say the person on the soul-killing drug looks “better.” That is the most insidious part of the whole thing.


I stayed drugged for well over 20 years–took the poison religiously. People kept telling me how the “meds” made me look and sound so much better. How they could actually stand to be around me.

It hurt somewhat to realize the real me was a monster. I needed a mask as well as a muzzle to go around real humans.

I believed keeping others happy was what mattered. If the drugs made me numb, unhappy or sick that was a small price to pay! Turns out later no one realized I felt that way. 🙁

Even though I took my toxins regularly–like some religious sacrament–I lived in almost daily terror that they would “stop working.” The MI system created this narrative to explain repeated hospitalizations of those of us obviously “med compliant.” If they “quit working” I was fearful I would lose control and run about like a rabid animal. Maybe even murder innocent people! The idea of suicide bothered me less, because I was mentally ill–not innocent. Often I was drawn to the idea. I believed I had no right to exist and owed my death to those around me. 🙁

I don't accept the validity of most of what psychiatrists say as it is, so why would I waste my time paying attention to a bot? The creator of this bot doesn't seem to understand much about what he says he's trying to do for people, nor does he seem to understand much about what affected his friend to the point that he took his own life. And then he has the gall to ask for payment for the bot's services, such as they are.

Thanks, Stephen. I agree he doesn't have much understanding at all… That is precisely what I meant to convey with these lines:

“This isn’t your world. You don’t belong here, at least not without some humility and a guide.”

“Joy basically is Danny. All his good intentions, hopes and dreams, naivete, ignorance, arrogance, and blind spots are there in equal measure.”

I wish he’d recognize that.

-Sera

We had Data on Star Trek: The Next Generation. He never quite got human interaction, but the Doctor on Star Trek: Voyager did much better. I think it would take an almost infinite number of if_then_go_to commands to make this thing work, or the decision tree would need almost infinite branches. In Star Trek, I guess they got learning algorithms to work.

What about that website, "Understood"? I still want you to take on that school-to-mental-patient pipeline. I hope you are not fooled by the clip art and web design of that website, which paint a picture of benevolent care for disabled kids when it's run by the same old child-drugging dirtbags with the same old agenda. Who is behind understood.org? Understood.org "Partners With The Child Mind Institute." "Koplewicz is perhaps best known for his public advocacy of increased usage of psychotropic medications for children diagnosed with Attention deficit hyperactivity disorder… He has received a number of industry awards, including the 1997 Exemplary Psychiatrist Award from the National Alliance for the Mentally Ill … In 2001 Koplewicz co-authored [Paxil] study 329. After leaving NYU in 2009, Koplewicz started the Child Mind Institute." Same old dirtbags.

And back to Joy, I bet a good computer programmer could make a Joy-like program for Alcoholics Anonymous. If "I feel like drinking," then go to "Did you make a gratitude list today?" or any number of the things suggested to avoid drinking and using. The decision tree is not that big; we drew them on the blackboard in treatment. Depression and so-called mental illness are way too complicated for the primitive computer technology of today, but I think a useful tool could be created for addiction recovery.

Hey, The-cat, I haven’t forgotten about your request for me to take a look at that site… 🙂 But, Joy has been waiting oh such a long time. 🙂 Things are pretty hectic on my end, but I do plan to look soon. 🙂

-Sera

I could use a paper notebook and a ballpoint pen for therapeutic venting. And I wouldn’t have to worry about some mental illness team rushing in where angels fear to tread. Sorry, Joy. I’m not a technophobe, but what good are you?

Sera, brilliant as always. Your questions to the bot cracked me up.

Thanks, Icagee. Joy is admittedly fun to play with… for all the wrong reasons! :p 😉

-Sera

Joy is an empty-headed numpty – I wonder what skill level Danny Freed has in programming if this is all he can manage?

Let's hope I don't get moderated for making an ad hominem attack on a computer programme!

Ha ha ha!

(They don’t have “like” icons here at MIA.)

John, I do see your last comment got removed for moderation! I can't quite remember what it said. :p Joy does seem fair game, though, all things considered… Someone recently e-mailed me to let me know there's a new bot on the Facebook scene called 'Woebot,' too…

That one is… I don’t know. Different. It gets around Joy’s biggest issues by simply providing pre-determined bubbles one can choose for response. :p

This doesn't even consider the privacy issues of discussing your mental health business on Facebook. It's bad enough to do it from an app on a smartphone (I forget which mindfulness-based and other apps there are), but Facebook is the worst.

Take for example, the “Private Message,” or PM, where you seemed to be communicating with Joy.

I was getting to know a new Facebook friend in another city via PM. She was talking about Asian American issues, and racism, and how difficult it was to be accepted in either the Asian or the American communities.

Facebook immediately decided that it was time to give me an ad for “Seeking Asian Women? Hot 18-21 yo Asian Women are ready to chat with you!”

Creepy.

Taken to the Big Brother level of interaction, Facebook collects your key phrases and metadata, which the NSA (and other awful acronyms) have direct access to. Children born since 2010 will have no idea what privacy is. They will interact with health bots and emotional bots, and their info will be reported to the appropriate department. A knock at the door, a syringe, a section or treatment order. Next!

I know it is taking your scenario about two steps into the future – but – considering that the cyber world expands at an exponential rate, I don't think my scenario is so far-fetched.

It will be a cold day in hell when I discuss my distress with anyone or anything on Facebook or on a smartphone. To find out more, investigate the PRISM data-gathering program. Ugly.

Here: https://mic.com/articles/46955/prism-scandal-what-are-the-9-internet-companies-accused-of-helping-the-government-spy-on-americans#.pKBUnfhf7 (just one example)

JanCarol,

Thanks for your comment! Yeah, I’ve noticed more and more the weird little recommendation buttons popping up in my chatboxes on Facebook… or ads that correspond to some element of what I’m saying! :p It is disconcerting to say the least… Though in fairness to Joy, what I’ve seen in her responses suggests a total lack of attention, rather than too much. But, I hear you and your fears on this count seem totally valid to me!

-Sera

Hey Sera – and notice that your PMs are not private!

But the total incompetence of Joy is exactly the kind of bogus thing that will get monetized and report on you – as well as market to you – based on what you say to KillJoy. She’s positively begging for marketing “improvements.”

I find that incompetence can be as dangerous as competence – especially in the digital realm. Look what happened last election!

Excellent article. I'm not sure which is worse: the overly simplistic approach to such a complex and powerful issue, or the privacy concerns.

But the trend has begun, like it or not. Someone may need to develop an app to generate "cutesy" names (or maybe one exists already). Remember Elmer Fudd and his "wascally wabbit"? Well, now we have "Woebot."

This one uses Facebook Messenger although they are working on their own messaging platform. Presumably this one will resist the temptation to monetize the information they collect. Right.

https://www.woebot.io/

Thanks for writing this article, Sera. Did you know that a retired military man developed and implemented a computer-assisted program for PTSD and got huge funding from the Veterans Administration plus FDA approval? "Assisted" means that clients talk to a computer program, like Joy, but have access to Skype interviews, too.