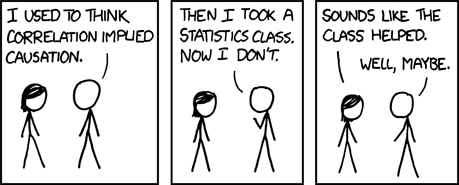

A new study has found that correlational studies may not use strict enough rejection criteria. This may mean that these studies make it too easy for researchers to conclude that their causal hypothesis is correct, despite the possibility of alternate explanations.

The study, just published in American Psychologist, was led by Drew Bailey at the University of California, Irvine. Bailey and his colleagues examined studies of children’s skill development—long-term studies that purport to demonstrate that children who are taught particular skills in school have a range of better outcomes in adolescence and adulthood. Bailey and the other researchers are critical of this research, suggesting that a number of alternative explanations might account for the findings.

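To count as valid scientific evidence, a study must be sufficiently “risky”: its design must give the hypothesis a real chance of being proven wrong. For a researcher’s conclusion to be accepted, it must be highly unlikely that the finding could be due to alternate explanations or to chance. In statistics, researchers typically report a p-value, the probability of seeing a result at least as strong as theirs if there were no real effect. By convention, this should be less than 5% (or 1% in stricter studies). A p-value speaks only to chance, however; it does not rule out alternate explanations.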

However, if researchers can find an alternate explanation that is just as likely to be true, the study’s conclusion must be rejected. Thus, studies must be “risky” enough to sufficiently reduce the possibility of these alternate explanations.

Researchers may believe that they have sufficiently accounted for alternative explanations. After all, any well-conducted study will report on such potential confounders as socioeconomic status, cognitive ability, and pre-study variables. However, it is difficult to account for every possible variable in a study that follows the real-life experiences of people outside the lab.

Additionally, researchers often state that their correlational evidence does not constitute causal evidence. However, if researchers discuss the policy implications of their work, causality is implied. One would not advocate for a skill-building intervention, for instance, if one did not believe it could cause improved outcomes. Thus, according to Bailey, it is often disingenuous for researchers to claim that their conclusions do not imply causality.

In the current study, Bailey and his colleagues examined findings from the skill development literature to determine whether the correlational studies reporting these results are sufficiently “risky” tests, that is, whether they can rule out alternate explanations.

Bailey used several methods to do this. First, he compared the correlational findings with experimental studies. Experiments allow researchers to control for more variables, and the comparison showed that alternative variables accounted for a larger-than-expected proportion of the correlational results. Because the experiments were short-term, they should have shown a greater effect of the intervention, since the gains would not have to be maintained over time. Instead, the skill-building interventions had a much smaller effect in these studies.

Second, Bailey used the principle of falsification to demonstrate that the same correlation appears where the skill-building hypothesis would not predict it: in this case, early math skills correlated with later language skills. This suggests that the correlation is unlikely to reflect math skill building alone, and more likely reflects another explanation, such as test-taking skills or school resources.

Finally, Bailey demonstrated that an alternative explanation could account for the original findings. He suggests a model of overall skills and unmeasured factors (such as school resources) which accounts for the findings just as well as a model of skill-building does. In addition, Bailey’s alternative explanation accounts for some of the unexpected findings as well, such as early math skill being linked to later literacy achievement.
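To see how an unmeasured shared factor can mimic a skill-building effect, consider a toy simulation. This is only an illustrative sketch, not Bailey’s actual model or data: the function names, the sample size, and the assumption that every score is simply a shared factor plus independent noise are all hypothetical. No score causally influences any other, yet early math correlates with later math, and it correlates just as strongly with later reading, which is the pattern the falsification test flags.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate(n_children=20000, seed=0):
    """Hypothetical data: each score = shared factor + independent noise.

    The shared factor stands in for general ability, school resources, and
    other unmeasured influences. Early math has NO causal path to either
    later outcome in this simulation.
    """
    rng = random.Random(seed)
    early_math, later_math, later_reading = [], [], []
    for _ in range(n_children):
        shared = rng.gauss(0, 1)
        early_math.append(shared + rng.gauss(0, 1))
        later_math.append(shared + rng.gauss(0, 1))
        later_reading.append(shared + rng.gauss(0, 1))
    return early_math, later_math, later_reading


early_math, later_math, later_reading = simulate()

# Under these assumptions the theoretical value of both correlations is 0.5.
r_math = pearson(early_math, later_math)
r_reading = pearson(early_math, later_reading)
print(f"early math vs later math:    {r_math:.2f}")
print(f"early math vs later reading: {r_reading:.2f}")
```

A correlational analysis of data like these could easily be read as evidence that early math instruction improves later math achievement. The equally strong link to reading is the warning sign that something other than math skill building is driving the result.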

These three methods—additional experimental evidence, falsification, and suggesting alternative models—provide researchers with tools to help validate whether their studies are sufficiently “risky” tests of their hypotheses.

Bailey suggests that these methods could be used in many areas of research to ensure the improved validity of correlational models.

According to Bailey, “We believe that when exposed to riskier tests and informed by prior experimental work, correlational data analyses can also help triangulate to the most useful theories.”

****

Bailey, D. H., Duncan, G. J., Watts, T., Clements, D. H., & Sarama, J. (2018). Risky business: Correlation and causation in longitudinal studies of skill development. American Psychologist, 73(1), 81-94. http://dx.doi.org/10.1037/amp0000146
